You have probably heard that we are running out of IPv4 addresses. Actually, the central pool of unallocated addresses ran out in 2011! IPv4 provides about 4.3 billion IP addresses, which is not enough to give everyone their own IP address. IPv5, an experimental streaming protocol, kept the same 32-bit addressing and so had the same limitation. Enter IPv6, which uses 128-bit addressing compared with IPv4’s 32-bit addressing. Instead of 4.3 billion IP addresses, we now have approximately 3.4 x 10^38 IP addresses to choose from. That’s more than 10^28 IP addresses for each of us! BTW, that’s better than telephone numbers, which will eventually run out, too.
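
If you want to check those numbers yourself, here is a quick sketch in Python. The world population figure of 8 billion is my own rough assumption, not an exact count.

```python
# Address-space arithmetic for IPv4 (32-bit) and IPv6 (128-bit).
IPV4_BITS = 32
IPV6_BITS = 128
WORLD_POPULATION = 8_000_000_000  # assumption: rough round figure

ipv4_total = 2 ** IPV4_BITS   # every possible 32-bit address
ipv6_total = 2 ** IPV6_BITS   # every possible 128-bit address
per_person = ipv6_total // WORLD_POPULATION

print(f"IPv4 addresses:  {ipv4_total:,}")
print(f"IPv6 addresses:  {ipv6_total:.2e}")
print(f"IPv6 per person: {per_person:.2e}")
```

These are raw counts; reserved and special-purpose ranges make the usable totals somewhat smaller in both cases.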

I wanted to see if I could upgrade the website that holds my genome, http://genome.startcodon.org, to IPv6. What prompted the whole thing was AWS’ announcement that they were going to start charging for public IPv4 addresses beginning in February 2024. I thought it might be a good time to migrate to IPv6 and save a little money. (The charge is $0.005 per hour for each public IPv4 address, so an EC2 instance with one such address costs about $4 more per month.)
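
For the curious, the $0.005/hour figure quoted above works out as follows. This is just back-of-the-envelope arithmetic, assuming a single address that stays allocated all year.

```python
# Rough cost of one public IPv4 address at the hourly rate quoted above.
HOURLY_RATE_USD = 0.005
HOURS_PER_YEAR = 24 * 365  # assumption: address held around the clock

yearly = HOURLY_RATE_USD * HOURS_PER_YEAR
monthly = yearly / 12

print(f"per month: ${monthly:.2f}, per year: ${yearly:.2f}")
```

That comes to roughly $3.65 per month, which is where my "about $4" comes from.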

TL;DR IPv6 is tricky; we are still not ready for it, and I am not saving any money.

The first place to start is with your computer. Use https://test-ipv6.com to see if you have a local IPv6 address. If not, you probably have to fix your router. I am using an Orbi RBR850, and the instructions to turn on IPv6 were straightforward.
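
If you prefer a check you can run from a terminal, here is a minimal Python sketch. It asks the operating system which local IPv6 address it would use to reach a public IPv6 host. The function name `local_ipv6_address` is my own, and the probe address is Google Public DNS, picked only because it is a well-known IPv6 address. A UDP `connect` sends no packets, so this only tells you whether your machine has a usable IPv6 route, not whether traffic actually gets through.

```python
import socket
from typing import Optional

def local_ipv6_address(probe_host: str = "2001:4860:4860::8888",
                       port: int = 53) -> Optional[str]:
    """Return the local IPv6 address the OS would use, or None if there is none."""
    try:
        with socket.socket(socket.AF_INET6, socket.SOCK_DGRAM) as sock:
            # For UDP, connect() only selects a route and source address.
            sock.connect((probe_host, port))
            return sock.getsockname()[0]
    except OSError:
        # No IPv6 support or no route to the IPv6 internet.
        return None

if __name__ == "__main__":
    address = local_ipv6_address()
    print(address if address else "No usable IPv6 address found")
```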

After fixing my router to speak IPv6, I thought I could declare victory and move on. Alas, no. Two days later I was sitting in a hotel room attempting to access my now IPv6-enabled website, http://genome.startcodon.org. But I couldn’t, so I tried https://test-ipv6.com/ to see what was going on. Sure enough, the hotel was not providing IPv6 addresses! So, back to square one.

It turns out that you can migrate to IPv6 (and I did), but keeping both IPv4 and IPv6 addresses running on your website maximizes interoperability for those folks who have not yet made the transition to IPv6. I suspect that we’ll be using IPv4 in combination with IPv6 for a long time.
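
To make "running both" concrete, here is a small sketch of a dual-stack listener using Python’s standard library. It is an illustration of the idea, not how my site is actually served; the helper name `open_listener` and the port number are my own choices. On platforms that support it, a single IPv6 socket accepts both IPv4 and IPv6 clients.

```python
import socket

def open_listener(port: int = 8080) -> socket.socket:
    """Open a TCP listener that accepts IPv4 and IPv6 clients where possible."""
    if socket.has_dualstack_ipv6():
        try:
            # One IPv6 socket that also accepts IPv4 connections.
            return socket.create_server(("", port),
                                        family=socket.AF_INET6,
                                        dualstack_ipv6=True)
        except OSError:
            pass  # fall through to IPv4-only below
    # Fallback: IPv4 only.
    return socket.create_server(("", port), family=socket.AF_INET)

if __name__ == "__main__":
    server = open_listener()
    print("listening on", server.getsockname())
    server.close()
```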

The checklist

Here’s a non-exhaustive list of some things to keep in mind when migrating to IPv6. Good luck!

- Your local network must assign an IPv6 address to your computer, probably using SLAAC or DHCPv6.

- Your ISP must support IPv6 natively or support a translation mechanism between IPv4 and IPv6.

- On AWS, your VPC, subnet, route table, network interface, and security group must be enabled to support IPv6.

- If you are using an S3 bucket, you must use its dual-stack endpoint to support IPv6.

- Optional DNS entry: Update your AAAA record (the IPv6 version of the A record) to point to your host.
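
For the DNS item, a quick way to see whether a hostname publishes IPv4 addresses, IPv6 addresses, or both is to ask the system resolver. The sketch below uses only the Python standard library; the function names `split_by_family` and `resolve` are my own. Note that it goes through your operating system’s resolver rather than querying DNS records directly, so treat the result as a hint rather than proof.

```python
import ipaddress
import socket
from typing import Dict, Iterable, List

def split_by_family(addresses: Iterable[str]) -> Dict[str, List[str]]:
    """Group address strings under 'A' (IPv4) and 'AAAA' (IPv6), without duplicates."""
    result: Dict[str, List[str]] = {"A": [], "AAAA": []}
    for text in addresses:
        text = text.split("%")[0]  # drop any scope suffix such as %eth0
        key = "AAAA" if ipaddress.ip_address(text).version == 6 else "A"
        if text not in result[key]:
            result[key].append(text)
    return result

def resolve(hostname: str) -> Dict[str, List[str]]:
    """Resolve a hostname and report which address families it returned."""
    try:
        infos = socket.getaddrinfo(hostname, 80, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return split_by_family([])
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return split_by_family(info[4][0] for info in infos)

if __name__ == "__main__":
    print(resolve("genome.startcodon.org"))
```

An empty "AAAA" list suggests the host has no IPv6 DNS entry yet, or that your resolver is not returning one.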

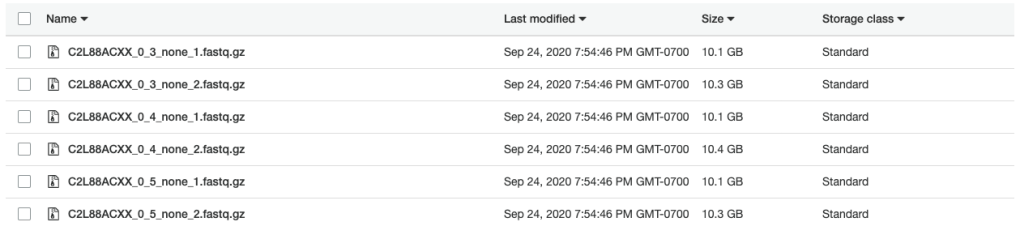

I got genomed by Illumina